An Illustrated Guide to Amazon S3

Amazon S3

Amazon S3 (Simple Storage Service) is a scalable and highly durable cloud storage service provided by Amazon Web Services (AWS). It allows users to store and retrieve data, such as files, documents, images, and videos, over the internet. S3 is widely used for data backup, content distribution, and as a foundation for various cloud-based applications.

In this illustrated guide, I shared my learnings and notes that I took on the Excalidraw tool while taking a course from Stephane Maarek.

Objects in S3

In AWS S3 (Simple Storage Service), objects are the fundamental units of storage, representing data files that can range from a few bytes to terabytes in size. Each object in S3 consists of data, a unique key (or identifier), and metadata, making it possible to store, retrieve, and manage data efficiently within S3 buckets.

Using AWS S3 to Host Static Websites

Hosting a static website using AWS S3 is a cost-effective and straightforward solution. By configuring an S3 bucket to act as a static website host and properly setting up bucket policies and permissions, you can serve HTML, CSS, JavaScript, and other web files to users with low-latency access and high availability, utilizing AWS's global infrastructure.

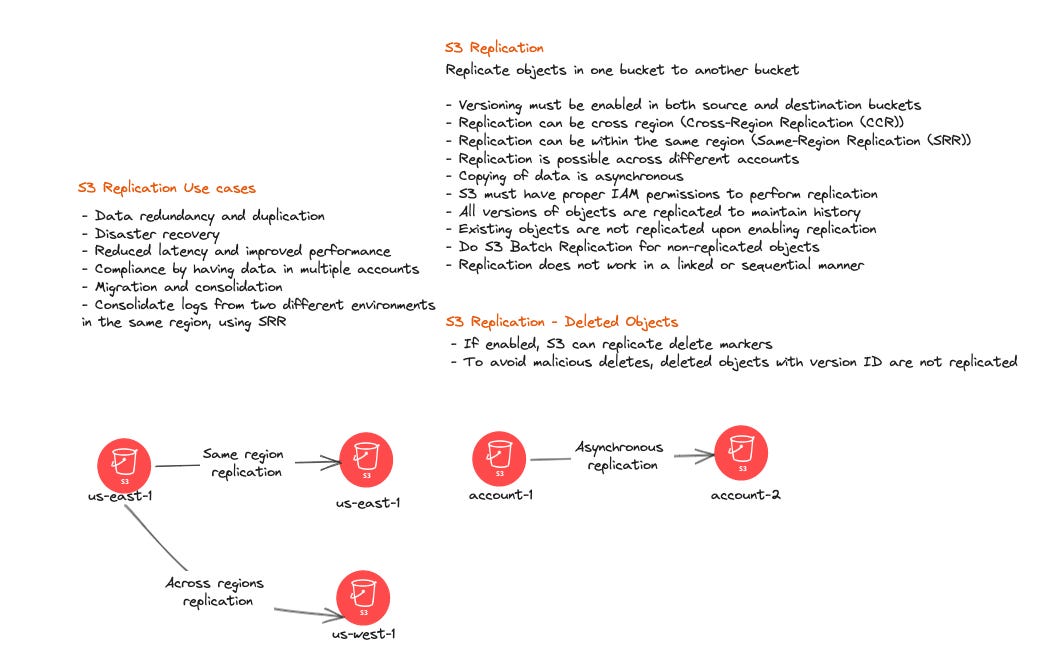

Replication in AWS S3

Replication of objects in AWS S3 is a feature that allows you to automatically and asynchronously copy objects (files) from one S3 bucket to another, either within the same AWS region or across different AWS regions. This replication process can help improve data durability, availability, and compliance. It provides redundancy by ensuring that your data is stored in multiple geographic locations, reducing the risk of data loss due to regional failures, and enabling disaster recovery capabilities. Additionally, object replication can be used for compliance and data residency requirements by replicating data to regions that meet specific regulatory criteria.

Classes in AWS S3

Storage classes in AWS S3 are different data storage options designed to meet specific performance, cost, and durability requirements. AWS offers a range of storage classes, including Standard General Purpose, Intelligent-Tiering, Standard-IA (Infrequent Access), One Zone-IA, Glacier Instant Retrieval, Glacier Flexible Retrieval, and Glacier Deep Archive. Each storage class is optimized for various use cases, allowing users to balance cost-efficiency with data availability and retrieval speed. These storage classes enable organizations to tailor their data storage strategies to align with the specific needs of their applications and data access patterns.

S3 Lifecycle rules

Lifecycle rules in AWS S3 are a feature that enables you to automate the management of objects (files) stored in S3 buckets over time. With lifecycle rules, you can define actions to be taken on objects based on criteria like object age, storage class, or specific tags. Some common actions include transitioning objects to different storage classes (e.g., moving from Standard to Glacier), deleting objects, or applying versioning. By setting up lifecycle rules, you can optimize storage costs, enforce data retention policies, and ensure that your S3 storage is efficiently managed as objects evolve through their lifecycle.

You can also use S3 Analytics to transition objects from one class to another.

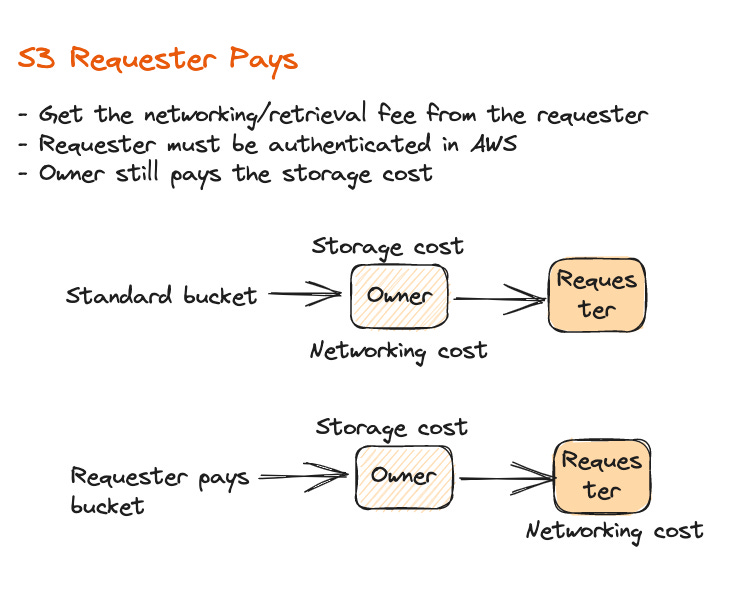

Requester Pay Buckets

Requester Pays buckets in AWS S3 are S3 buckets for which the requester, rather than the bucket owner, incurs the costs associated with data transfer and requests. When a bucket is configured as a Requester Pays bucket, any external entity or user who wants to access objects within that bucket must pay for the associated data transfer and request costs. This allows bucket owners to share data stored in their buckets while shifting the cost responsibility to the requester.

Requester Pays buckets are commonly used in scenarios where data owners want to share their data but don't want to bear the cost of serving that data to external users. This feature is often employed for data sharing, public datasets, or scenarios where data consumers are expected to cover the associated costs. It provides a way to share data publicly while ensuring cost accountability for the data transfer and request expenses incurred by external users.

S3 Event Notifications

Event notifications in AWS S3 are a feature that allows you to set up automated actions or triggers in response to events that occur within your S3 buckets. These events can include actions such as object creation, deletion, or modification. When an event occurs, S3 can send notifications to various AWS services like AWS Lambda, Amazon Simple Queue Service (SQS), or Amazon Simple Notification Service (SNS), which can then trigger custom code or workflows.

For example, you can configure an event notification to trigger an AWS Lambda function whenever a new object is uploaded to an S3 bucket. This Lambda function could process the uploaded object, generate thumbnails, perform data validation, or trigger other actions based on your specific requirements.

Event notifications in S3 enable you to automate processes, integrate S3 with other AWS services, and build event-driven architectures, making it easier to manage and respond to changes in your S3 data.

S3 Performance

To boost performance, AWS offers various tools and features. Multi-part upload enhances efficiency for large file transfers by parallelizing uploads and reducing failures. S3 Transfer Acceleration leverages edge locations to expedite data transfers. Byte range fetches minimize latency by fetching only needed portions of objects. S3 Select optimizes query performance and reduces data transfer costs by retrieving specific data from objects, particularly useful for large datasets. These features collectively enhance the overall performance and cost-effectiveness of AWS services.

S3 Batch Operations

Batch operations in AWS S3 allow you to efficiently manage large numbers of objects within your S3 buckets. You can use batch operations to perform tasks like copying, deleting, tagging, and restoring multiple objects simultaneously, saving time and reducing manual effort. By leveraging S3 batch operations, you can automate and streamline data management tasks across extensive object sets, enhancing the scalability and efficiency of your S3-based applications.

AWS S3 Security

AWS S3 provides robust security features to safeguard data stored within its buckets. Bucket policies enable fine-grained access control, allowing administrators to define who can access objects and under what conditions.

Encryption is a critical aspect of S3 security, with options for encryption in transit using HTTPS and encryption at rest using server-side encryption mechanisms like SSE-S3, SSE-KMS, or SSE-Custom. These ensure data remains confidential and protected even during transmission and while at rest.

S3 also supports Cross-Origin Resource Sharing (CORS) configuration to control which web domains can access your resources, preventing unauthorized data access. Multi-Factor Authentication (MFA) delete requires additional authentication for critical operations, reducing the risk of accidental or unauthorized deletions.

Access logs allow users to monitor and audit S3 bucket access, providing detailed records of all requests, which can be invaluable for security and compliance purposes. In combination, these security features help ensure the confidentiality, integrity, and availability of data stored in AWS S3.

S3 Pre-signed URL

Pre-signed URLs in AWS S3 are temporary URLs generated with specific permissions that grant temporary access to objects in an S3 bucket. These URLs are often used to provide secure and time-limited access to private objects without requiring recipients to have AWS credentials, making them valuable for sharing files while maintaining control over access.

Locks on S3

Object Lock in AWS S3 is a feature that helps enforce retention policies on objects to protect them from being deleted or altered for a specified duration. It ensures data immutability and compliance with regulatory requirements.

Glacier Vault Lock is a feature within Amazon S3 Glacier, a long-term data archival service offered by AWS. Glacier Vault Lock allows you to enforce compliance controls on your archived data by configuring and applying a Vault Lock policy to a Glacier vault.

Set Multiple Access Points for S3

Multiple access points in Amazon S3 allow you to create network paths for specific applications or sets of users, simplifying access management to S3 buckets. They enable fine-grained access control, security, and customization for various use cases within a single S3 bucket, making it easier to segregate access and permissions based on application or user requirements.

S3 Object Lambdas

AWS S3 Object Lambdas is a serverless compute service that lets you process and transform data stored in S3 objects on-the-fly with custom code. It allows you to create dynamic, real-time transformations of S3 objects, making it easier to generate tailored content or perform actions like data redaction, image resizing, or data enrichment as objects are retrieved from S3.

Summary

In this comprehensive article, we've delved into the world of AWS S3, uncovering its powerful features and functionalities. From its fundamental role as a cloud storage service to its diverse applications across industries, Amazon S3 emerges as an indispensable tool in the tech landscape. Whether you're a beginner exploring its basics or a seasoned pro harnessing its capabilities, this article provides a thorough understanding of the AWS S3 ecosystem, making it an essential read for anyone navigating the cloud storage terrain.