Monitoring

Monitoring, at its core, is about keeping a watchful eye on a software system's health, performance, and overall functionality. It's the digital supervisor, constantly observing and reporting on the condition of your applications.

Google's Site Reliability Engineering (SRE) teams define monitoring as:

Collecting, processing, aggregating, and displaying real-time quantitative data about a system, such as query counts and types, error counts and types, processing times, and server lifetimes.

Monitoring isn't just a checkbox on the software operations to-do list; it's a practical tool for spotting potential problems and keeping things running smoothly. By actively keeping an eye on various parameters, we gain insights into potential glitches, patterns, and trends, helping us make informed decisions.

Why Monitoring Is Important

With monitoring in place for your software systems, you can answer many key questions, such as:

What are the peak usage times of my system?

How many Daily Active Users do I have, and when and how fast did that number grow?

What is the latency on incoming requests from different geographical locations?

Which database queries are taking longer than average?

What is the health of each service? When did it stop working, and when did it throw errors?

What are the most common errors occurring in the system, and where do they originate?

What is the utilization of my resources, and can I use them more efficiently?

Which functionality is more likely to break based on existing trends?

What is the forecasted future state of my application health and usage?

For any software running in production, robust monitoring is essential. It tells you how healthy your software actually is, replaces guesswork with data, and ensures customers are not left in the dark.

Monitoring Vocabulary You Need To Know

Alert:

Definition: An automated notification triggered by a predefined condition or threshold, signaling a potential issue that requires attention.

Example: Receiving an alert when server response time exceeds a specified limit.

Threshold:

Definition: A predefined value that, when surpassed, triggers an alert or other designated action.

Example: Setting a threshold for CPU utilization at 90% to be notified when the system is nearing its processing capacity.
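To illustrate how an alert and a threshold fit together, here is a minimal Python sketch. `ThresholdRule` and `should_alert` are hypothetical names, not part of any monitoring tool. The sketch also assumes one common refinement: the rule fires only after several consecutive samples exceed the threshold, so a single short CPU spike does not notify anyone.

```python
from dataclasses import dataclass


@dataclass
class ThresholdRule:
    """Hypothetical alert rule: fire when a metric stays above `threshold`."""
    metric: str
    threshold: float
    consecutive: int = 3  # breaches required in a row before the alert fires


def should_alert(rule: ThresholdRule, samples: list[float]) -> bool:
    """Return True if the most recent `rule.consecutive` samples all exceed the threshold."""
    if len(samples) < rule.consecutive:
        return False
    recent = samples[-rule.consecutive:]
    return all(value > rule.threshold for value in recent)


cpu_rule = ThresholdRule(metric="cpu_utilization_percent", threshold=90.0)
print(should_alert(cpu_rule, [72.0, 95.0, 60.0, 91.0]))  # False: isolated spikes
print(should_alert(cpu_rule, [80.0, 92.5, 94.0, 97.1]))  # True: sustained above 90%
```

Requiring consecutive breaches trades a slightly slower alert for far fewer false alarms; many alerting tools offer a similar "condition must hold for a while" setting.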

Incident:

Definition: An unplanned event that disrupts normal operations, often requiring investigation and resolution.

Example: A sudden increase in error rates leading to an incident requiring immediate attention.

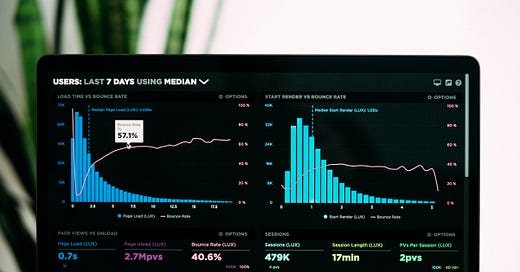

Dashboard:

Definition: A visual representation of key metrics and performance indicators, providing a comprehensive overview of the system's status.

Example: An application dashboard displaying real-time data on user activity, response times, and error rates.

Metric:

Definition: A quantifiable measure used to assess the performance, health, or behavior of a system.

Example: Monitoring response time, CPU utilization, and error rates as key metrics.

Logging:

Definition: The process of recording events, activities, or messages generated by a system for future analysis or troubleshooting. Logging is append-only, which means historical entries are never changed.

Example: Logging user login attempts and errors for security analysis and auditing.
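A minimal sketch of append-only logging in Python, assuming a JSON Lines file (one JSON object per line). The `log_event` helper and the field names are hypothetical. Opening the file in append mode means new records are only ever added at the end.

```python
import json
import os
import tempfile
import time


def log_event(path: str, event: str, **fields) -> None:
    """Append one event as a single JSON line; earlier lines are never modified."""
    record = {"ts": time.time(), "event": event, **fields}
    # Mode "a" sends every write to the end of the file, so existing
    # entries stay exactly as they were written.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


# Usage: write two login attempts to a throwaway file and print them back.
with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "auth_events.jsonl")
    log_event(log_path, "login_attempt", user="alice", success=True)
    log_event(log_path, "login_attempt", user="bob", success=False)
    with open(log_path, encoding="utf-8") as f:
        for line in f:
            print(line.rstrip())
```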

Latency:

Definition: The time it takes for a system to respond to a request or perform an action.

Example: Measuring the latency between initiating a request and receiving a response.

Downtime:

Definition: The period during which a system or service is unavailable or not functioning as expected.

Example: Scheduled maintenance causing planned downtime or unexpected issues leading to unplanned downtime.

Scalability:

Definition: The ability of a system to handle increased load or demand by efficiently allocating resources.

Example: Optimizing an application for scalability to ensure it performs well as the user base or data volume grows.

Uptime:

Definition: The percentage of time a system or service is operational and available.

Example: A service with 99.9% uptime over a 30-day month is unavailable for at most about 43 minutes in that month.
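Uptime targets are easier to reason about when translated into a downtime budget. This sketch assumes a 30-day period by default; `allowed_downtime_minutes` is a hypothetical helper.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float = 30) -> float:
    """Maximum downtime (in minutes) that still meets `uptime_percent` over the period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


for target in (99.0, 99.9, 99.99):
    # Prints roughly 432.0, 43.2 and 4.3 minutes respectively.
    print(f"{target}% over 30 days -> {allowed_downtime_minutes(target):.1f} min of downtime")
```

Each extra "nine" cuts the budget by a factor of ten, which is why high uptime targets get expensive quickly.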

Performance Tuning:

Definition: The process of optimizing a system's configuration and settings to enhance its efficiency and responsiveness.

Example: Adjusting database indexes or optimizing code for improved performance.

Baseline:

Definition: A reference point representing normal or expected behavior for system metrics, helping identify deviations and potential issues.

Example: Establishing a baseline for average response time under normal operating conditions.

Capacity Planning:

Definition: The process of determining the resources needed to meet current and future demands.

Example: Planning server capacity to accommodate growth in users or data.

Anomaly Detection:

Definition: The identification of deviations or irregularities in system behavior that may indicate potential issues.

Example: Anomaly detection algorithms flagging unusual patterns, such as a sudden spike in network traffic.
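A simple way to combine a baseline with anomaly detection is a z-score check: compute the mean and standard deviation of a baseline period, then flag new readings that fall too many standard deviations away. This is only one basic technique (it ignores seasonality such as daily traffic cycles), and the function name, the limit of 3 standard deviations, and the sample numbers are hypothetical.

```python
import statistics


def zscore_anomalies(baseline: list[float], recent: list[float], limit: float = 3.0) -> list[float]:
    """Return the values in `recent` that lie more than `limit` standard
    deviations away from the mean of `baseline`."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs >= 2 points
    if stdev == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [v for v in recent if v != mean]
    return [v for v in recent if abs(v - mean) / stdev > limit]


# Hypothetical requests-per-minute samples from a "normal" period...
baseline_rpm = [980, 1010, 995, 1005, 990, 1020, 1000]
# ...and the latest readings, including a sudden traffic spike.
latest_rpm = [1003, 997, 2450]
print(zscore_anomalies(baseline_rpm, latest_rpm))  # [2450]
```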

SLA (Service Level Agreement):

Definition: A formal agreement outlining the expected level of service, including performance metrics and uptime guarantees.

Example: An SLA stipulating that response times should not exceed a certain threshold.

SLO (Service Level Objective):

Definition: A specific, measurable target for a service level, defining acceptable performance. An SLA usually contains one or more SLOs, but teams also set SLOs purely for internal use.

Example: Setting an SLO that 99.9% of requests complete within 100 milliseconds.
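The sketch below checks a batch of request latencies against a latency SLO of 99.9% of requests within 100 ms, and reports how much of the error budget (the 0.1% of requests allowed to be slow) has been used. `slo_report` and the sample data are hypothetical.

```python
def slo_report(latencies_ms: list[float], threshold_ms: float = 100.0,
               target: float = 0.999) -> dict:
    """Evaluate a latency SLO of the form "`target` of requests complete
    within `threshold_ms`" and report how much error budget is left."""
    if not latencies_ms:
        raise ValueError("no requests to evaluate")
    if not 0 < target < 1:
        raise ValueError("target must be between 0 and 1 (exclusive)")
    total = len(latencies_ms)
    good = sum(1 for ms in latencies_ms if ms <= threshold_ms)
    bad = total - good
    allowed_bad = (1 - target) * total  # error budget, in number of requests
    return {
        "compliance": good / total,
        "meets_slo": good / total >= target,
        # 1.0 = budget untouched, 0.0 = fully spent, negative = SLO missed
        "budget_remaining": 1 - bad / allowed_bad,
    }


# Hypothetical batch: 9,995 fast requests and 5 slow ones.
latencies = [40.0] * 9_995 + [350.0] * 5
report = slo_report(latencies)
print(f"{report['compliance']:.2%} within 100 ms, meets SLO: {report['meets_slo']}, "
      f"error budget left: {report['budget_remaining']:.0%}")
```

With 5 slow requests out of 10,000, the SLO is met, but half of the error budget (10 slow requests) is already used.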

Monitoring Metrics

Now, let's talk about what really matters in monitoring: the metrics. Monitoring involves keeping tabs on a range of metrics, each offering a specific view of your software's health. Here are some high-level metric groups:

Performance Metrics: This covers things like response times, resource usage, and overall system efficiency. It's about ensuring your software components are pulling their weight.

Error Rates and Logging: Watching error rates and system logs helps catch issues before they become major problems. An uptick in error rates signals areas that need attention, preventing potential headaches.

User Experience Metrics: Monitoring page load times and transaction success rates shows you how users actually experience your software.

Security Metrics: No surprises here: monitoring security metrics is about keeping your software safe from cyber threats by identifying unusual activity.

Resource Utilization: Efficient use of resources is vital. Keep an eye on how your software uses resources to optimize performance and avoid bottlenecks.

To be more precise, here are some examples of common metrics used in software monitoring:

Response Time:

Definition: The time it takes for a system to respond to a user request.

Example: The average response time for HTTP requests served by a web server.
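Averages can hide slow outliers, so response times are often reported as percentiles as well. This sketch uses the nearest-rank percentile definition (one of several in use); the helper name and the sample latencies are hypothetical.

```python
import math
import statistics


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least p% of
    all samples are less than or equal to it."""
    if not values or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical response times (ms): most requests are fast, two are very slow.
response_ms = [120, 95, 110, 130, 105, 98, 2400, 115, 101, 3100]
print("mean:", statistics.mean(response_ms))
print("p50: ", percentile(response_ms, 50))
print("p95: ", percentile(response_ms, 95))
```

Here the mean (about 637 ms) describes neither the typical request (p50 of 110 ms) nor the slowest ones (p95 of 3,100 ms).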

CPU Utilization:

Definition: The percentage of available central processing unit (CPU) time that the system spends doing work.

Example: Monitoring CPU utilization to ensure it stays within acceptable levels to prevent performance degradation.

Memory Usage:

Definition: The amount of RAM (random access memory) being used by a system.

Example: Tracking memory usage to identify potential memory leaks or inefficient resource utilization.
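For a Python service, the standard-library `tracemalloc` module offers one way to look for leaks: measure how much allocated memory is still held after repeating an operation. The leaky `handle_request` function below is a hypothetical example. Note that `tracemalloc` only sees memory allocated through Python's allocators; production monitoring usually also tracks the whole process's memory as reported by the operating system.

```python
import tracemalloc

_request_cache = []  # hypothetical bug: entries are appended but never evicted


def handle_request() -> None:
    # Simulates a handler that keeps about 10 KB per request alive forever.
    _request_cache.append(bytearray(10_000))


def traced_growth(fn, iterations: int) -> int:
    """Run `fn` repeatedly and return how many bytes of Python-allocated
    memory are still held afterwards, as seen by tracemalloc."""
    tracemalloc.start()
    try:
        before, _peak = tracemalloc.get_traced_memory()
        for _ in range(iterations):
            fn()
        after, _peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return after - before


growth = traced_growth(handle_request, 500)
print(f"retained after 500 calls: {growth / 1024:.0f} KiB")
```

If the retained amount keeps growing in proportion to the number of calls, something is holding on to memory it should release.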

Error Rate:

Definition: The percentage of requests or transactions that result in errors.

Example: Monitoring the error rate of a database query to identify issues that may impact user experience.
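Computing an error rate is a simple ratio once you decide what counts as an error. This sketch uses HTTP status codes as the example and assumes only 5xx responses are errors (4xx responses are treated as client mistakes); `error_rate` and the sample data are hypothetical.

```python
from collections import Counter


def error_rate(status_codes: list[int]) -> float:
    """Fraction of responses that are server errors (HTTP 5xx).

    Assumption: 4xx responses are not counted; adjust the condition
    to match your own definition of an error."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if 500 <= code <= 599)
    return errors / len(status_codes)


codes = [200] * 950 + [404] * 20 + [500] * 25 + [503] * 5  # hypothetical sample
print(f"error rate: {error_rate(codes):.1%}")
# Break the errors down by code to see where they come from.
print(Counter(code for code in codes if code >= 500))
```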

Throughput:

Definition: The rate at which a system processes requests or transactions.

Example: Measuring the number of transactions processed per second by an application server.
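One common way to measure throughput is a sliding window: remember the timestamps of recent events and divide their count by the window length. `ThroughputMeter` is a hypothetical class; the injectable `clock` parameter exists only so the example can use simulated time.

```python
import time
from collections import deque


class ThroughputMeter:
    """Sliding-window throughput: events per second over the last `window_s` seconds."""

    def __init__(self, window_s: float = 60.0, clock=time.monotonic):
        self.window_s = window_s
        self.clock = clock
        self._events = deque()  # timestamps of recent events, oldest first

    def record(self) -> None:
        self._events.append(self.clock())

    def rate(self) -> float:
        now = self.clock()
        # Drop events that are older than the window.
        while self._events and self._events[0] < now - self.window_s:
            self._events.popleft()
        return len(self._events) / self.window_s


# Usage with a simulated clock: a steady 10 transactions per second for 10 seconds.
fake_now = [0.0]
meter = ThroughputMeter(window_s=10.0, clock=lambda: fake_now[0])
for i in range(100):
    fake_now[0] = i * 0.1
    meter.record()
fake_now[0] = 10.0
print(f"{meter.rate():.1f} transactions/s over the last 10 s")
```

Because the count is always divided by the full window length, the reported rate is too low until the meter has been running for one full window.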

Network Latency:

Definition: The time it takes for data to travel between two points on a network.

Example: Monitoring network latency to ensure timely communication between distributed components.

Disk I/O (Input/Output):

Definition: The rate at which data is read from or written to a storage device.

Example: Tracking disk I/O to prevent bottlenecks and ensure efficient data storage and retrieval.

Availability:

Definition: The percentage of time that a system is operational and available for use.

Example: Measuring the availability of a web service to ensure it meets service level agreements (SLAs).

Concurrency:

Definition: The number of users or processes concurrently interacting with a system.

Example: Monitoring the concurrency of a messaging system to prevent overload and ensure responsiveness.
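Concurrency is usually tracked as a gauge: increment it when an operation starts and decrement it when the operation finishes. This sketch, built around a hypothetical `InFlightGauge` class, also remembers the peak value and uses a lock so that concurrent threads update the counters safely.

```python
import threading
import time
from contextlib import contextmanager


class InFlightGauge:
    """Tracks how many operations are running right now and the highest value seen."""

    def __init__(self):
        self._lock = threading.Lock()
        self.current = 0
        self.peak = 0

    @contextmanager
    def track(self):
        with self._lock:
            self.current += 1
            self.peak = max(self.peak, self.current)
        try:
            yield
        finally:
            # Always decrement, even if the tracked operation raised.
            with self._lock:
                self.current -= 1


gauge = InFlightGauge()


def handle_message(delay_s: float) -> None:
    with gauge.track():
        time.sleep(delay_s)  # stand-in for real message processing


threads = [threading.Thread(target=handle_message, args=(0.2,)) for _ in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("in flight now:", gauge.current, "peak:", gauge.peak)
```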

Security Metrics:

Definition: Metrics related to the security and integrity of a system, including failed login attempts, security incidents, and unauthorized access attempts.

Example: Tracking the number of security events and identifying patterns that may indicate a potential security threat.
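As one example of a security metric, the sketch below counts failed login attempts per key (a username or IP address) within a sliding time window and flags keys that reach a limit, a common sign of password guessing. The class name, the limit of 5 attempts and the 5-minute window are hypothetical choices.

```python
import time
from collections import defaultdict, deque


class FailedLoginTracker:
    """Flags a key (user or IP) whose failed logins in the last `window_s`
    seconds reach `limit`."""

    def __init__(self, limit: int = 5, window_s: float = 300.0, clock=time.monotonic):
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        self._failures = defaultdict(deque)  # key -> timestamps of recent failures

    def record_failure(self, key: str) -> bool:
        """Record one failed attempt; return True if `key` should be flagged."""
        now = self.clock()
        attempts = self._failures[key]
        attempts.append(now)
        # Forget failures that happened before the window started.
        while attempts and attempts[0] < now - self.window_s:
            attempts.popleft()
        return len(attempts) >= self.limit


tracker = FailedLoginTracker(limit=5, window_s=300.0)
for attempt in range(6):
    flagged = tracker.record_failure("203.0.113.7")  # documentation-range IP
    print(attempt + 1, flagged)
```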

These examples represent just a fraction of the diverse set of metrics that can be monitored in a software system. The choice of metrics depends on the specific goals, requirements, and characteristics of the application or infrastructure being monitored.

Distinguishing Symptoms from Causes in Monitoring

Effective monitoring answers two fundamental questions: what's broken, and why? The "what's broken" is the symptom, while the "why" is the cause, which may be only an intermediate cause rather than the root one. Below, I pair hypothetical symptoms with possible causes to emphasize this critical distinction.

Hypothetical Symptoms and Causes

Increased latency in user interactions

Cause: Network congestion due to a sudden surge in traffic or a DDoS attack

Elevated error rates during peak hours

Cause: Insufficient server capacity to handle the increased load

Inconsistent application performance

Cause: Memory leaks in the application code affecting system stability

Authentication failures for specific users

Cause: Recent security patch causing compatibility issues with user authentication

Sudden drops in website traffic

Cause: Content not properly indexed by search engines after a website redesign

Unexplained spikes in resource utilization

Cause: Inefficient database queries leading to excessive server resource consumption

Unavailability of a critical service

Cause: Hardware failure in the primary server or data center

Anomalous access patterns to sensitive data

Cause: Unauthorized user attempting to exploit vulnerabilities in the system

Slow response times for geographically distant users

Cause: Inadequate Content Delivery Network (CDN) coverage in certain regions

Discrepancies in reported user metrics

Cause: Incorrect configuration of analytics tools affecting data accuracy

The distinction between "what" and "why" is a cornerstone of effective monitoring strategies, helping you get maximum signal and minimum noise from your system observations.

Black-Box vs. White-Box Monitoring

White-box and black-box monitoring are two distinct approaches in the field of system monitoring, each offering unique perspectives on system health and performance.

White-Box Monitoring:

Definition: White-box monitoring involves inspecting and analyzing the internal workings of a system, typically through direct access to system components, logs, and instrumentation.

Focus: It is concerned with understanding the internal state of the system, detecting potential issues, and predicting problems before they manifest as symptoms.

Example: Monitoring the performance of a database by analyzing query execution times, examining logs for errors, and tracking resource utilization within the database server.
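White-box monitoring depends on instrumentation inside the code. This sketch shows one approach in Python: a decorator that records call counts, errors and durations for a function such as a database query. The `METRICS` dictionary, the `instrumented` decorator and `find_user` are hypothetical; a real service would export these numbers to a monitoring backend, and the plain dictionary updates here are not thread-safe.

```python
import functools
import time
from collections import defaultdict

# Hypothetical in-process metric store, keyed by operation name.
METRICS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_s": 0.0, "max_s": 0.0})


def instrumented(name: str):
    """Decorator that records call count, error count and duration under `name`."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                METRICS[name]["errors"] += 1
                raise
            finally:
                # Runs for both successful and failing calls.
                elapsed = time.perf_counter() - start
                stats = METRICS[name]
                stats["calls"] += 1
                stats["total_s"] += elapsed
                stats["max_s"] = max(stats["max_s"], elapsed)
        return wrapper
    return decorator


@instrumented("db.find_user")
def find_user(user_id: int) -> dict:
    # Stand-in for a real database query.
    if user_id < 0:
        raise ValueError("invalid id")
    time.sleep(0.01)
    return {"id": user_id}


find_user(1)
find_user(2)
try:
    find_user(-1)
except ValueError:
    pass
stats = METRICS["db.find_user"]
print(stats["calls"], "calls,", stats["errors"], "errors,",
      f"avg {stats['total_s'] / stats['calls'] * 1000:.1f} ms")
```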

Black-Box Monitoring:

Definition: Black-box monitoring observes the system's externally visible behavior from the user's perspective, without direct access to its internal components.

Focus: It is primarily symptom-oriented, identifying and alerting when there is an active issue or deviation from expected behavior.

Example: Monitoring a web application by assessing response times, checking for error codes in HTTP responses, and alerting when the system experiences downtime without delving into the specifics of internal processes.
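A black-box check only sees what a user would see. This sketch, using Python's standard `urllib.request`, requests a URL and records the status code, the latency and whether the response counts as healthy. The `probe` function and the rule that any 2xx or 3xx status means "up" are assumptions.

```python
import time
import urllib.error
import urllib.request


def probe(url: str, timeout_s: float = 5.0) -> dict:
    """Black-box check: request `url` from outside and record only what a
    user would observe (status code, latency, success)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with a 4xx/5xx status
    except OSError:
        status = None  # URLError, timeouts and refused connections: no answer at all
    latency_ms = (time.perf_counter() - start) * 1000
    return {
        "url": url,
        "status": status,
        "latency_ms": latency_ms,
        "up": status is not None and 200 <= status < 400,
    }
```

For example, `probe("https://example.com/")` could run every minute from a location outside your infrastructure, with an alert whenever `up` is false or `latency_ms` exceeds a threshold.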

In summary, white-box monitoring looks inside the system, examining its internals for potential issues and predicting problems. Black-box monitoring, on the other hand, observes the system's external behavior, providing insights into active symptoms and deviations from expected performance without detailed knowledge of internal processes. Combining these approaches can offer a comprehensive understanding of a system's health and facilitate effective issue detection and resolution.

In upcoming blog posts, I plan to dive deeper into monitoring, covering the strategies and tools you can use to build a long-term monitoring approach for your software applications.

Thumbnail Photo by Luke Chesser on Unsplash

Thanks for reading my post! Let’s stay in touch 👋🏼

🐦 Follow me on Twitter for real-time updates, tech discussions, and more.

🗞️ Subscribe to this newsletter for weekly posts.