What I learned from Google's Introduction to Generative AI Course

Insights and takeaways from Google's Generative AI Course

I’m currently working through Google’s “Introduction to Generative AI Learning Path” and taking detailed notes on its first module, “Introduction to Generative AI”. In this post I’m sharing those notes, organized by topic, in the hope that they are useful to anyone exploring the subject. Read section by section, they give an overview of the module’s key ideas.

Is It AI, Machine Learning, or Deep Learning?

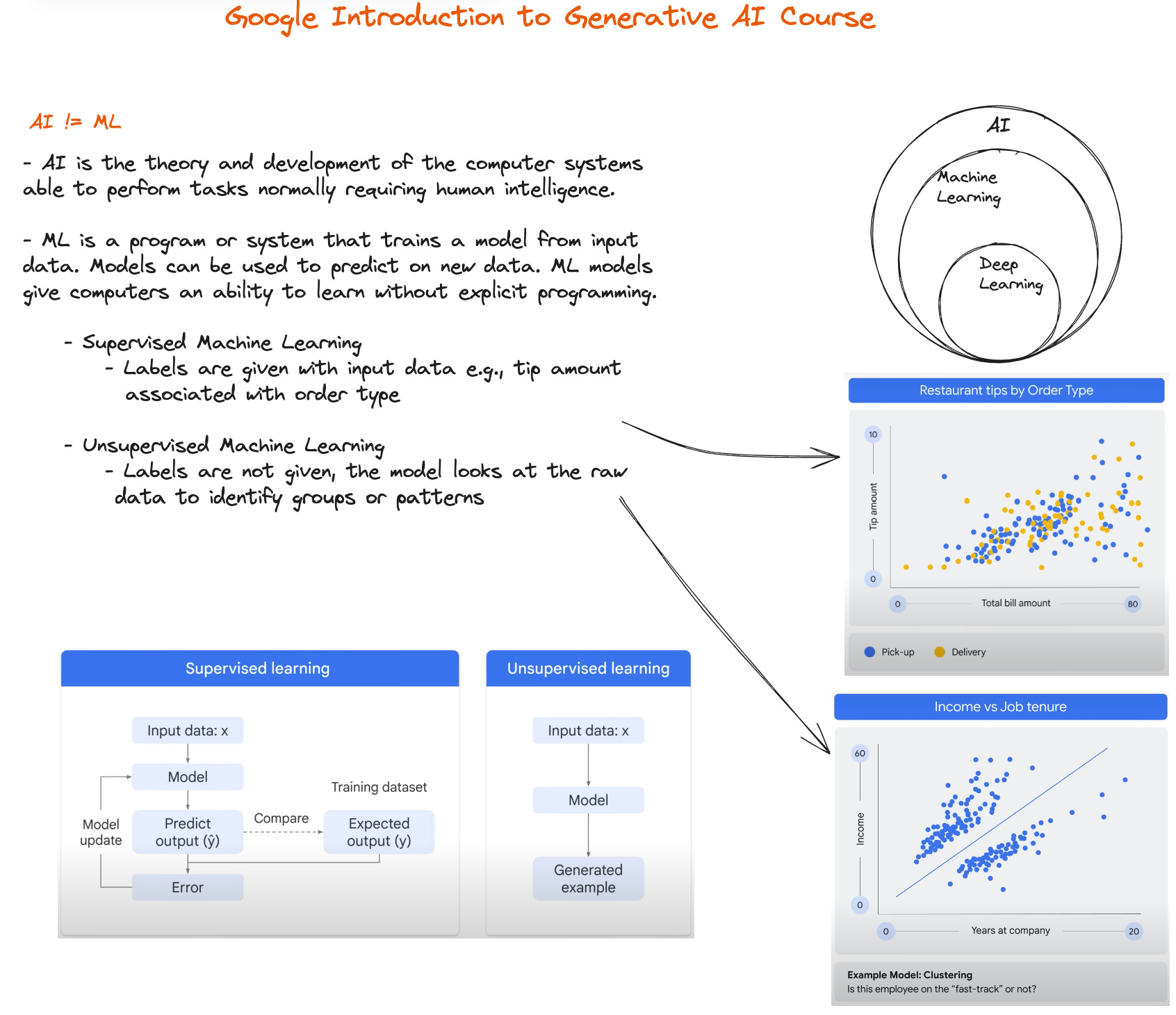

In today’s tech landscape, the terms AI, ML, and Large Language Models are used so often, and so loosely, that the distinctions between them can blur. AI, or artificial intelligence, covers the theory and development of computer systems able to perform tasks that normally require human intelligence. It is a broad field that includes machine learning, natural language processing, computer vision, and more. Most AI systems in use today are built for specific tasks, such as chatbots, image recognition, recommendation systems, and game playing.

Machine Learning (ML) is a subset of AI that uses statistical techniques to let computer systems learn and improve from experience without being explicitly programmed. ML algorithms analyze data, identify patterns, and make predictions, which makes them central to applications like recommendation systems, image recognition, and data analysis.
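To make “learning from data instead of explicit programming” concrete, here is a minimal sketch of my own (not from the course): a one-variable linear regression fitted with ordinary least squares. The rule linking inputs to outputs is never written into the code; `fit_line` estimates it from example pairs. The function names and data are hypothetical.

```python
def fit_line(xs, ys):
    """Estimate slope w and intercept b for y ≈ w * x + b using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(w, b, x):
    """Apply the learned pattern to a new, unseen input."""
    return w * x + b


if __name__ == "__main__":
    # Hypothetical training examples that happen to follow y = 2x + 1
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]
    w, b = fit_line(xs, ys)
    print(w, b)              # 2.0 1.0
    print(predict(w, b, 5))  # 11.0
```

The program only ever sees examples; the slope of 2 and intercept of 1 are recovered from them, which is the essence of learning a pattern rather than hard-coding it.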

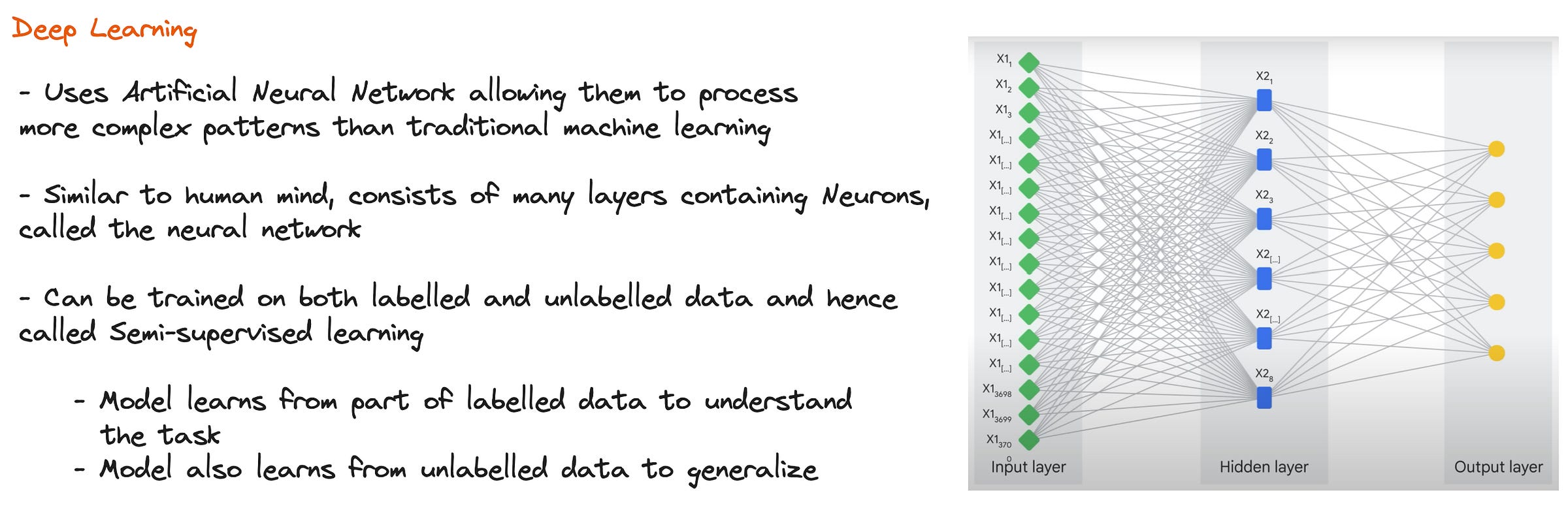

Deep Learning (DL) is a subfield of ML that uses neural networks with multiple layers (deep neural networks) to learn representations of data automatically, which lets it handle more complex tasks. DL has revolutionized areas like computer vision and natural language processing, enabling breakthroughs in image recognition, language translation, and speech recognition.
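Why do extra layers help? A classic illustration (my own, not from the course) is XOR: no single linear layer can separate its outputs, but two layers with a ReLU between them can. The sketch below sets the weights by hand purely to show the layered computation; in a real deep network those weights would be learned from data, typically with gradient descent. The function names are hypothetical.

```python
def relu(z):
    """Rectified linear unit: passes positive values through, zeroes out negatives."""
    return max(0.0, z)


def xor_network(x1, x2):
    """Hypothetical two-layer network with hand-picked weights that computes XOR."""
    # Weights chosen by hand for illustration; a trained network would learn them.
    # Hidden layer: two neurons, each a weighted sum followed by ReLU
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: a linear combination of the hidden features
    return 1.0 * h1 - 2.0 * h2


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_network(a, b))
```

The hidden layer builds intermediate features (“at least one input is on” and “both inputs are on”), and the output layer combines them. Deep networks apply the same idea across many more layers and neurons.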

Generative AI and LLMs

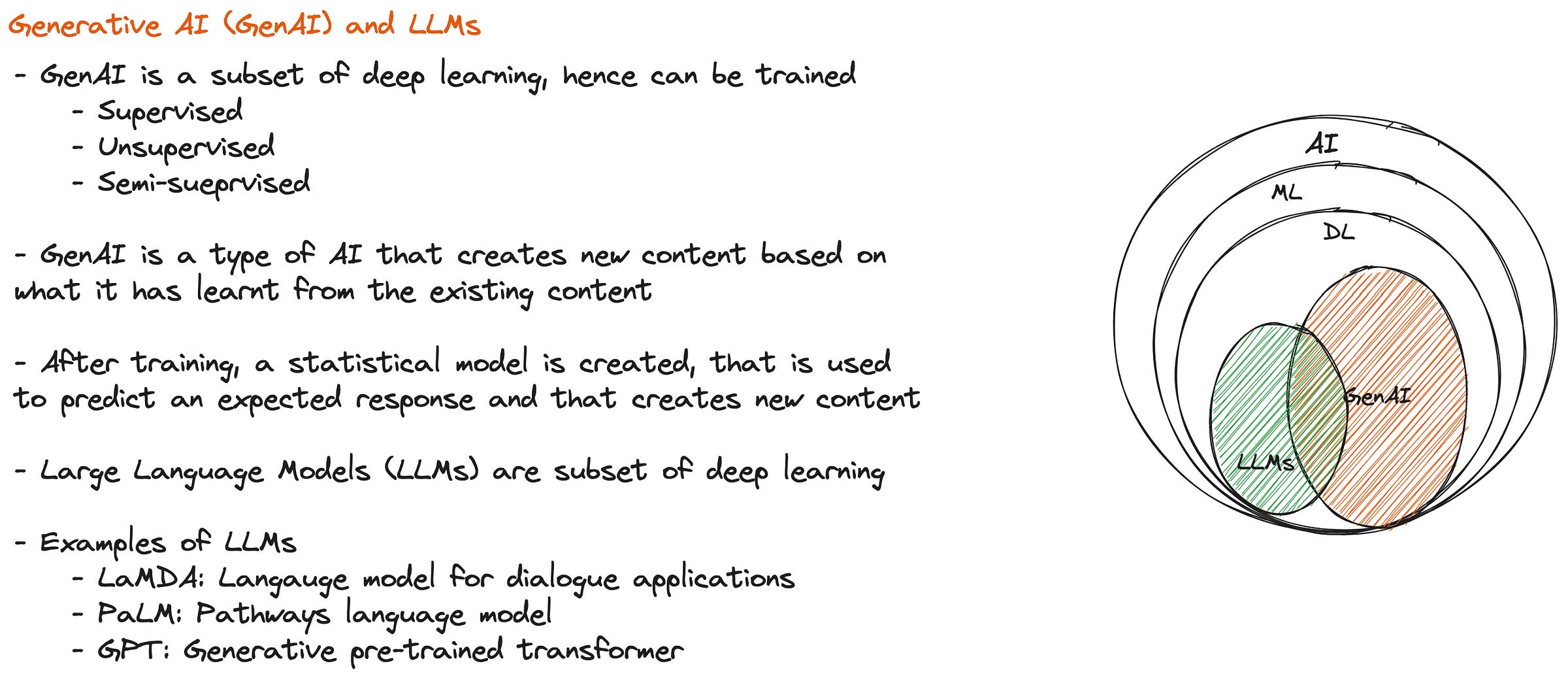

Generative AI and Large Language Models (LLMs) both fall under the umbrella of deep learning, and the two areas overlap significantly, as illustrated in the figure above. Both learn patterns and representations from massive datasets. Generative models such as Generative Adversarial Networks (GANs), and large language models such as GPT-3, are trained on extremely large datasets so that they can capture this structure and produce meaningful output.

Generative AI is used primarily to create new content, including images, music, text, and other kinds of data. Its applications include creative work such as generating art and writing content, as well as augmenting datasets.

Large Language Models, by contrast, are built specifically for natural language processing tasks such as text generation, summarization, translation, and question answering. Text generation is one of their strengths, but their focus is firmly on language.
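As a toy illustration of how a language model generates text, here is a sketch of my own (not how GPT-3 or any production LLM is implemented): a bigram model that records which word follows which in a small training text, then produces new text by repeatedly sampling a next word. Real LLMs replace these counts with a deep Transformer network trained on vastly more data, but the “generate one token at a time” loop is similar in spirit. The corpus and function names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for each word, every word that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=0):
    """Generate up to `length` words by sampling each next word from observed followers."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:
            break  # dead end: this word was never followed by anything
        # Sampling from the list favours words that followed more often
        out.append(rng.choice(options))
    return " ".join(out)


if __name__ == "__main__":
    # Hypothetical training text
    corpus = "the cat sat on the mat and the dog sat on the rug"
    model = train_bigrams(corpus)
    print(generate(model, "the", 8))
```

The output is new text that was never in the corpus as a whole, yet every word-to-word step was observed in training, a very small-scale version of “producing content that resembles the training data”.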

Discriminative vs. Generative Models

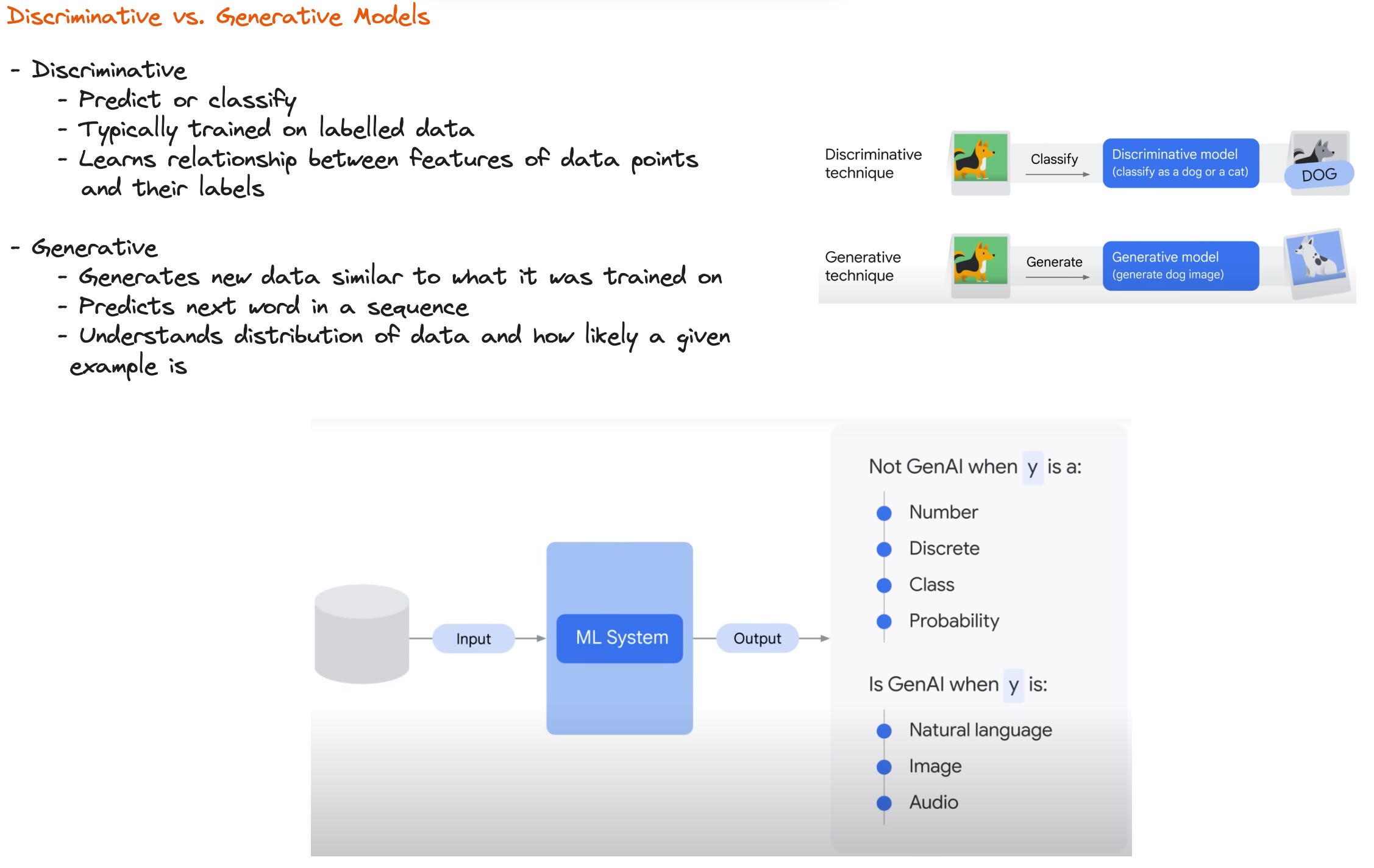

Discriminative and generative models are two fundamental types of machine learning models with distinct purposes. Discriminative models learn the conditional probability of an output given an input, P(y | x), which makes them well suited to classification. They learn the boundary that separates the classes in the data and make decisions based on it.

In contrast, generative models learn the joint probability distribution of inputs and outputs, P(x, y), which lets them generate new data samples that resemble the training data. They are commonly used for data synthesis, image generation, and natural language processing, where capturing the underlying data distribution is what makes creating new content and augmenting data possible. Each type has its strengths, and the right choice depends on the problem and objectives at hand.

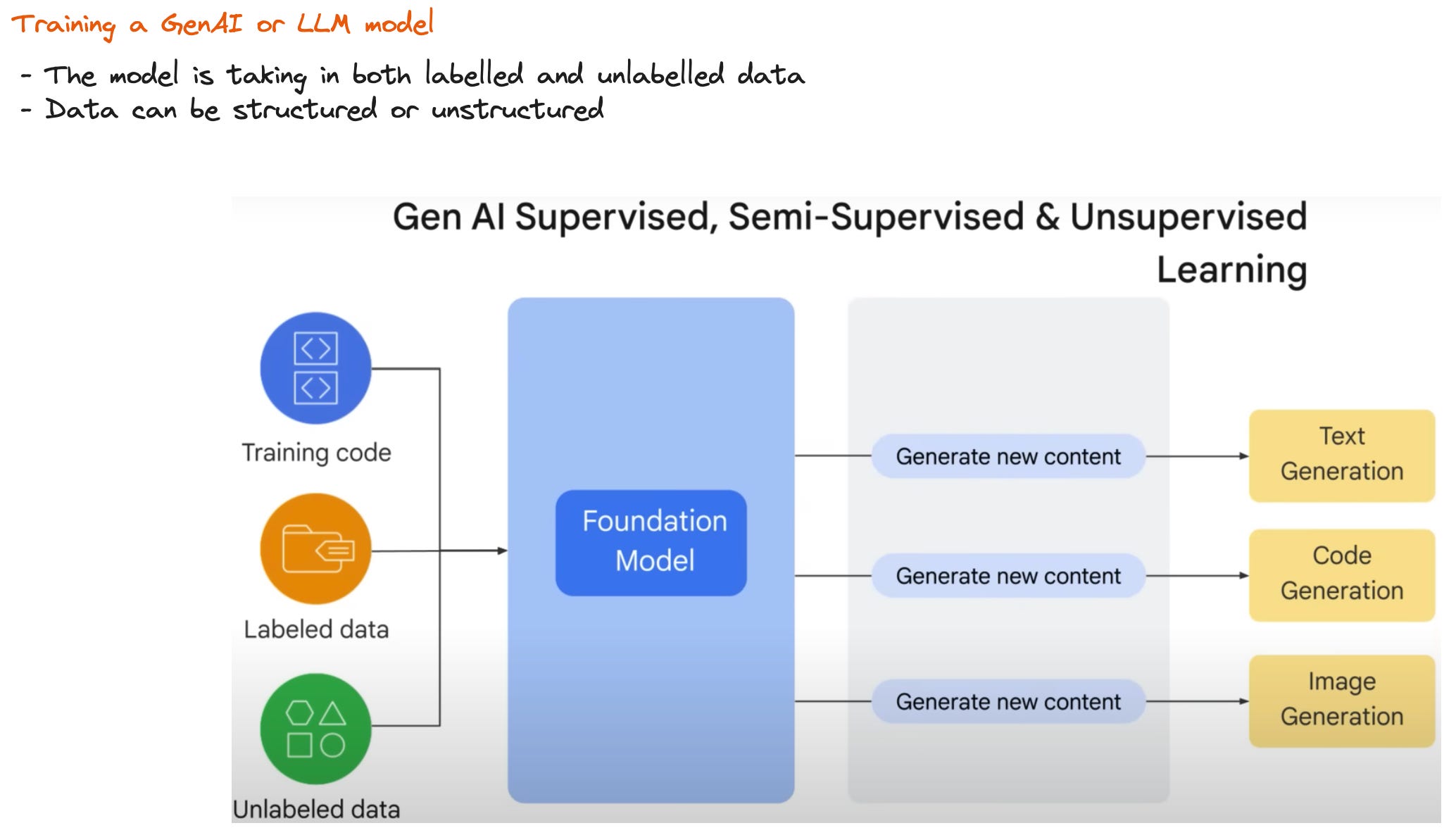
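The difference can be seen with simple counting. The sketch below (my own toy example; the data and function names are hypothetical) fits both kinds of model to the same tiny labelled dataset. The discriminative version stores only P(y | x), which is enough to classify. The generative version stores the joint P(x, y): it can classify too, because for a fixed x the label with the largest P(x, y) also has the largest P(y | x) = P(x, y) / P(x), and it can additionally sample new (x, y) pairs. Real models learn these distributions with parameters rather than lookup tables.

```python
import random
from collections import Counter, defaultdict

# Hypothetical labelled data: (observed feature, label)
DATA = [
    ("whiskers", "cat"), ("whiskers", "cat"), ("whiskers", "dog"),
    ("barks", "dog"), ("barks", "dog"), ("barks", "dog"),
]


def fit_discriminative(pairs):
    """Estimate only the conditional P(y | x) from counts."""
    by_x = defaultdict(Counter)
    for x, y in pairs:
        by_x[x][y] += 1
    return {
        x: {y: c / sum(counts.values()) for y, c in counts.items()}
        for x, counts in by_x.items()
    }


def fit_generative(pairs):
    """Estimate the joint P(x, y) from counts."""
    n = len(pairs)
    return {xy: c / n for xy, c in Counter(pairs).items()}


def classify_discriminative(model, x):
    """Pick the label with the highest P(y | x)."""
    return max(model[x], key=model[x].get)


def classify_generative(model, x):
    """Pick the label with the highest P(x, y); P(x) is constant for a fixed x."""
    scores = {y: p for (xi, y), p in model.items() if xi == x}
    return max(scores, key=scores.get)


def sample_generative(model, rng):
    """Draw a new (x, y) pair from the learned joint distribution."""
    pairs = list(model)
    return rng.choices(pairs, weights=[model[p] for p in pairs], k=1)[0]


if __name__ == "__main__":
    disc = fit_discriminative(DATA)
    gen = fit_generative(DATA)
    print(classify_discriminative(disc, "whiskers"))  # cat
    print(classify_generative(gen, "whiskers"))       # cat
    print(sample_generative(gen, random.Random(0)))   # some (feature, label) pair
```

Both models agree on the classification, but only the generative one can produce new data, which mirrors the distinction described above.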

Training and Data for GenAI Models

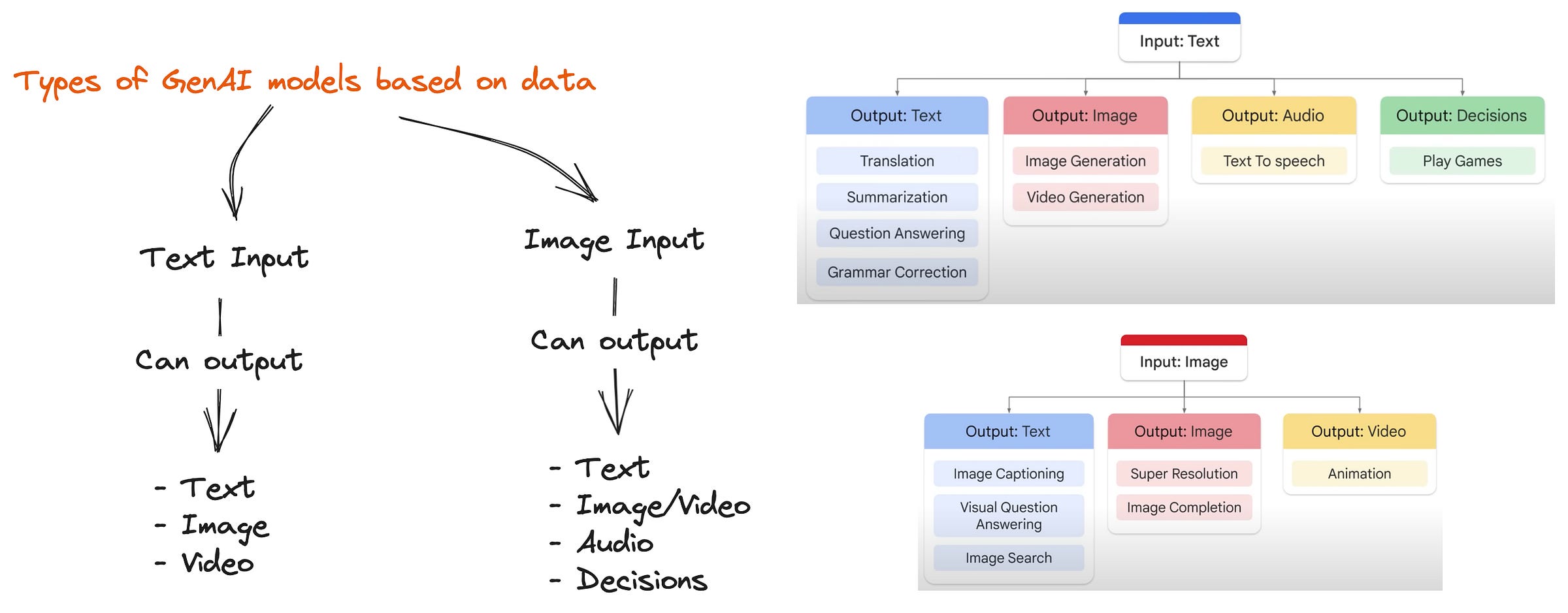

Generative models can be trained on both labelled and unlabelled data, and on structured as well as unstructured data. Once trained, they can handle a wide range of tasks: text summarization, rephrasing, question answering, translation, generating images and videos from text descriptions, writing captions, and much more, as illustrated in the chart below.

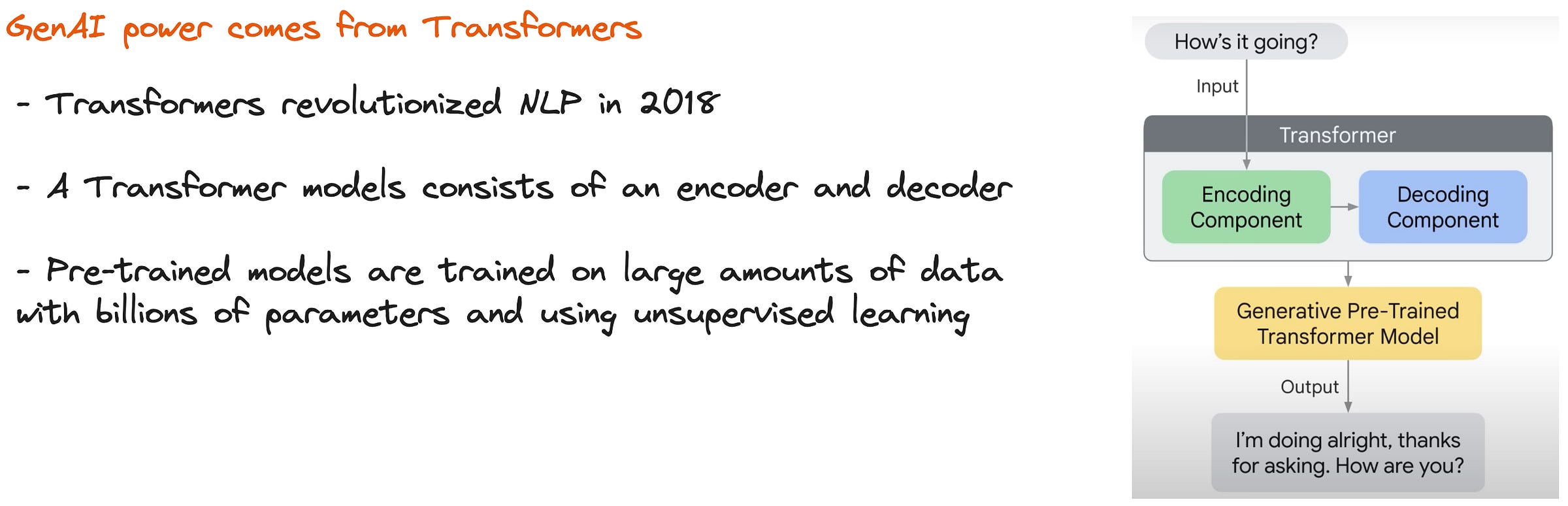
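One common way generative language models use unlabelled text is self-supervision: the training targets come from the text itself, for example by predicting each next word from the words before it. The sketch below is my own illustration (the course does not prescribe this exact scheme, and `make_next_word_examples` and `context_size` are hypothetical names). It turns raw, unlabelled text into labelled (context, next word) training pairs.

```python
def make_next_word_examples(text, context_size=2):
    """Hypothetical helper: turn raw text into (context, target) pairs.

    No human labelling is needed: each target is simply the word that
    actually comes next in the text.
    """
    words = text.split()
    examples = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        examples.append((context, words[i]))
    return examples


if __name__ == "__main__":
    for context, target in make_next_word_examples("the cat sat on the mat"):
        print(context, "->", target)
```

For the six-word sentence above, this yields four training pairs, the first being `(('the', 'cat'), 'sat')`. Scaled up to billions of words, this is how unlabelled text can still supply a supervised training signal.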

Transformer Models

Transformer models are a neural network architecture that reshaped natural language processing and underpins much of today's generative AI. They were introduced in the 2017 paper "Attention Is All You Need" by Vaswani et al. Transformers rely on a mechanism called self-attention, which lets them process all positions of an input sequence in parallel rather than one step at a time, as recurrent networks do. This makes them efficient and effective for tasks like language translation and text generation, and it led to breakthroughs such as OpenAI's GPT-3, which can generate coherent, contextually relevant text across a wide range of applications.
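The core of self-attention is scaled dot-product attention, softmax(QKᵀ / √d)·V. The pure-Python sketch below is a simplification under stated assumptions: it uses the input vectors directly as queries, keys, and values, whereas a real Transformer first multiplies them by learned projection matrices and uses multiple attention heads. Each token's output is a weighted average of all tokens, weighted by similarity. Because each position's computation is independent of the others, real implementations run them all at once as matrix multiplications.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Simplified scaled dot-product self-attention.

    `tokens` is a list of equal-length vectors. Simplification: queries,
    keys and values are all the raw token vectors (no learned projections).
    """
    d = len(tokens[0])
    outputs = []
    for query in tokens:  # independent per position, so parallelizable
        # Similarity of this token to every token, scaled by sqrt(d)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        weights = softmax(scores)
        # Weighted average of the value vectors
        outputs.append([sum(w * value[j] for w, value in zip(weights, tokens)) for j in range(d)])
    return outputs


if __name__ == "__main__":
    # Three hypothetical 2-dimensional token embeddings
    embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in self_attention(embeddings):
        print([round(v, 3) for v in row])
```

Every output vector mixes information from the whole sequence, which is how attention lets each token take its context into account.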

Output Accuracy

GenAI models often produce impressive results, but they are not infallible. They can generate incorrect output or hallucinate, producing content that sounds plausible but is false or nonsensical. Human oversight and validation therefore remain essential, especially where accuracy matters.

Prompts

Reducing hallucinations starts with understanding that a model's output depends heavily on the input it receives. That input, called a "prompt", plays a pivotal role in shaping the output. A prompt is usually a short piece of text, and the context it supplies helps the model produce more relevant and precisely tuned responses.
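To show how added context changes what a model is actually asked, here is a small sketch of my own (not from the course) that assembles a prompt from a task plus optional context and constraints. The `build_prompt` name, the section labels, and the layout are hypothetical conventions, not a format required by any particular model, and no model API is called.

```python
def build_prompt(task, context=None, constraints=None):
    """Hypothetical helper: assemble a prompt from optional context/constraints plus a task."""
    # Section labels below are an illustrative layout, not a required model format
    parts = []
    if context:
        parts.append("Context:\n" + context)
    if constraints:
        parts.append("Constraints:\n" + "\n".join("- " + c for c in constraints))
    parts.append("Task:\n" + task)
    return "\n\n".join(parts)


if __name__ == "__main__":
    bare = build_prompt("Summarise the report.")
    grounded = build_prompt(
        "Summarise the report.",
        context="Q3 sales report for the EMEA region.",
        constraints=["Use at most three bullet points", "Say 'unknown' if a figure is missing"],
    )
    print(bare)
    print("---")
    print(grounded)
```

The bare prompt leaves the model to guess what report is meant and how to answer; the grounded version states both. Asking the model to say "unknown" instead of guessing is one commonly suggested way to discourage made-up answers, though it does not eliminate them.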

Further Reading

The course shared tons of reading material that can be very helpful in diving deeper into the topic. Here are some of my favourite articles:

Thanks for reading my post! Let’s stay in touch 👋🏼

🐦 Follow me on Twitter for real-time updates, tech discussions, and more.

🗞️ Subscribe to this newsletter for weekly posts.